
About this series

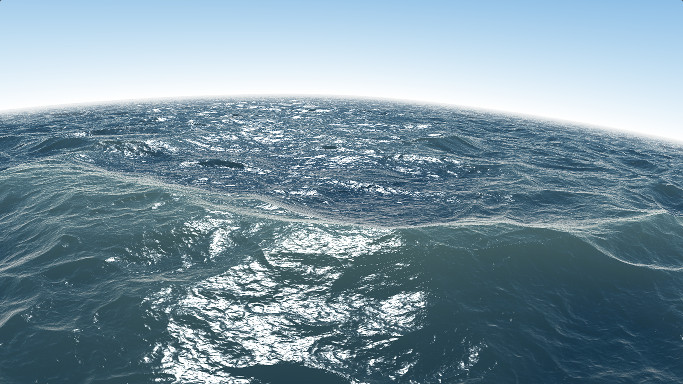

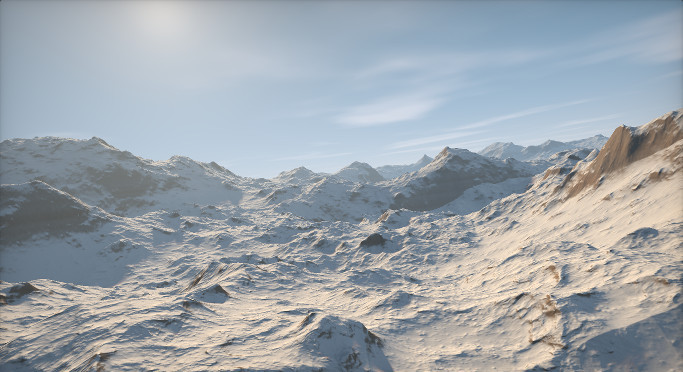

What we aim to achieve is making shaders more impressive than these:

I haven’t done this yet, but I have the will and dedication needed to take these shaders apart and turn them into tutorials. Needless to say, it will take a lot of time and effort, but it’s doable and it’s worth it. Although shaders like these are rarely used directly in games, studying them should undoubtedly make you more comfortable writing shaders for games. It’s like the next, far superior level of awesomeness 😀

Introduction

The fixed pipeline

Back in the old days, when we needed to draw something in 3D, the graphics hardware had to explicitly support it. So, to draw a scene with a light source, the graphics hardware had to support drawing with at least one light source, and the light source’s position, orientation, color, intensity, attenuation and type (point, parallel or spot) had to be communicated to it through the graphics API (like DirectX or OpenGL). If you needed shadows, the graphics hardware had to support drawing shadows, and you’d go like: enable shadows with the following properties, bla bla bla…

That was called the fixed pipeline. You want to do lights? Here’s how to do them, bla bla bla. Textures? Bla bla bla. Transformations? Bla bla bla. And the list goes on. It’s customizable, but it’s still fixed.

Hidden flexibility

Since whether a certain feature was available depended on whether the hardware/driver in question supported it, one might conclude that these features were implemented directly in hardware, something like: here’s the logic circuit that does lighting. Well, most of the time it didn’t work this way.

There’s a lot in common between different drawing operations that hardware designers wouldn’t want to duplicate. For instance, most drawing operations need floating-point arithmetic, and giving each operation its own dedicated arithmetic units would be wasteful. So instead of building large blocks that each perform one specific operation, they provide small units of functionality which, put together, form more meaningful operations.

How these units are combined to provide the end functionality is specified through a program. This program could be stored in a ROM (or the like) on the graphics hardware, or in the software driver shipped with it. So what appeared to be a fixed pipeline was, most of the time, a programmable pipeline that simply wasn’t exposed by the graphics API.

The dawn of shaders

Then came a brilliant idea. Why bother implementing every single feature an effects programmer might want in the hardware or in the driver? Why should the APIs keep growing larger and less flexible every time a feature is added? Why not just expose the programmable nature of the graphics hardware to programmers and let them do whatever they want with it, keeping the APIs lean and clean and giving programmers more control over their pipeline?

As of DirectX 8 and OpenGL 2.0 (or OpenGL 1.4 with shader extensions), programmers were given the option of writing their own shading programs (in later versions, it became their only option). However, not all of the graphics pipeline was made programmable, only the vertex and fragment (pixel) processing parts. Programmers could provide their own vertex and fragment “shaders” to do these. In general, shaders are the programs that run at these programmable stages; fragment shaders in particular determine the color of every pixel in a computer-generated image.
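To make the two programmable stages a bit more concrete, here is a minimal sketch of a vertex shader that could accompany the fragment shaders in this series, assuming GLSL ES 1.00 (WebGL 1). The attribute name a_position is hypothetical; the host application would have to supply it, for example with the corners of a rectangle covering the whole canvas.

```glsl
// Minimal GLSL ES 1.00 (WebGL 1) vertex shader.
// a_position is a hypothetical attribute name: the host application would feed it
// the corners of a rectangle covering the canvas, in clip-space coordinates (-1 to 1).
attribute vec2 a_position;

void main(void) {
    // Built-in vertex shader output: the clip-space position of this vertex.
    gl_Position = vec4(a_position, 0.0, 1.0);
}
```

The vertex shader only decides where the rectangle ends up; the fragment shader then runs for the fragments it covers and decides their colors.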

Later, the pipeline became longer and more sophisticated to allow for more complicated effects, exposing more types of shaders that fit into it (such as geometry and tessellation shaders). By that time, people had started to recognize graphics hardware for its massively parallel nature and huge computing power, so non-graphics APIs (such as CUDA and OpenCL) were defined to give programmers control over the hardware on a whole new level, allowing other kinds of applications to run on it.

This series of tutorials is about painting with graphics shaders (the programs that give pixels their final colors). So, let’s paint some colors!

Our first shader

Wow! We just made a shader that colors every pixel of the canvas green! Now let’s take a look at this program:

void main(void) {
    gl_FragColor = vec4(0.0, 1.0, 0.0, 1.0);
}

Looks like C, huh? Well, it definitely does, but it differs from C in quite a few ways. This is GLSL (OpenGL Shading Language), the language used to write OpenGL shaders. If you are writing DirectX shaders, you would use HLSL (High Level Shading Language) instead. In this series of tutorials we will only use GLSL, but translating it into HLSL should be straightforward.

Now back to the code,

void main(void) {
    gl_FragColor = vec4(0.0, 1.0, 0.0, 1.0);
}

This is a fragment shader. It’s a program that runs once for every pixel drawn to the screen. Well, not exactly. It can run more than once for the same pixel, for example when there’s transparency: the color of the object in the back has to be determined first, before the transparent surface in front is drawn over it.

Actually, it’s not just about color: this shader could also be responsible for the depth and the transparency level, and even for whether the pixel is drawn at all or discarded altogether. In fact, the output might not be a pixel on the screen at all. It might go to an off-screen buffer holding depth values that are later used to render shadows, or maybe some sort of object id used to identify which object each pixel belongs to.

You know what? For all we know, it could be a “fragment” of a pixel. When using MSAA (multi-sample anti-aliasing), each pixel is sampled multiple times at slightly different locations, and the final pixel color is a weighted sum of all these samples. So we are not really talking about pixels, but about fragments. Hence the name “fragment shader”.
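As a small sketch of the “discarded altogether” case, assuming GLSL ES 1.00 (WebGL 1): this shader throws away every other 8x8 block of fragments with the discard keyword, so nothing at all is written for them. The block size of 8 pixels is an arbitrary choice.

```glsl
// Fragment shaders in GLSL ES have no default float precision, so declare one
// before declaring any float variables.
precision mediump float;

void main(void) {
    // gl_FragCoord.xy holds the window coordinates of the fragment being shaded.
    vec2 cell = floor(gl_FragCoord.xy / 8.0);
    // Discard every other 8x8 cell: no color is written for these fragments.
    if (mod(cell.x + cell.y, 2.0) < 1.0) {
        discard;
    }
    gl_FragColor = vec4(0.0, 1.0, 0.0, 1.0);
}
```

The result is a green checkerboard whose “empty” squares simply keep whatever was in the buffer before.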

Now LET’S GET BACK TO THE CODE!

void main(void) {

This is the entry point of the fragment shader. It takes no parameters and returns no values. However, there are a lot of inputs to the fragment shader and possibly quite a lot of outputs as well. Since they are not always used, we won’t go much into their details now.
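Just for a taste of what those inputs look like, here is a sketch (GLSL ES 1.00 assumed) that reads the built-in input gl_FragCoord, the window position of the fragment being shaded, to paint a gradient. The uniform u_resolution is a hypothetical name; the host application would have to set it to the canvas size in pixels, otherwise the division is meaningless.

```glsl
precision mediump float;

// Hypothetical uniform: the host application must set it to the canvas size in pixels.
uniform vec2 u_resolution;

void main(void) {
    // Built-in input: window coordinates of this fragment, divided to get 0..1.
    vec2 uv = gl_FragCoord.xy / u_resolution;
    // Built-in output: red grows from left to right, green from bottom to top.
    gl_FragColor = vec4(uv.x, uv.y, 0.0, 1.0);
}
```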

gl_FragColor = vec4(0.0, 1.0, 0.0, 1.0);

Here’s an output of the fragment shader. We didn’t declare it; it’s built-in. “gl_FragColor” holds the value that is written to the drawing buffer (if only one is bound during drawing). In the common case (ours), this is a color buffer holding the final image. In other words, this is where we write the color of the fragment.
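A note for newer versions: gl_FragColor belongs to GLSL ES 1.00 (WebGL 1). GLSL ES 3.00 (WebGL 2) removed it, and the shader declares its own output variable instead. A sketch of our green shader in that version, where the name fragColor is our own choice:

```glsl
#version 300 es
precision mediump float;

// GLSL ES 3.00 has no gl_FragColor; the shader declares its own output.
// With a single output and no explicit location, it goes to location 0.
out vec4 fragColor;

void main(void) {
    fragColor = vec4(0.0, 1.0, 0.0, 1.0);
}
```

The #version line must be the very first line of the shader source.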

Notice how the numbers are written. You can see that we’ve explicitly made them all floats. Now try to compile the following shader:

What did you expect? 😀 Honestly, I can’t predict what happened! You see, this may or may not work, depending on your hardware and drivers. Desktop OpenGL (GLSL 1.20 and later) supports some implicit conversions, such as int to float. OpenGL ES and WebGL (which are almost the same) don’t support implicit conversions. Since you are most probably reading this tutorial in a web browser, it shouldn’t work.

But what if it works?! This is one of the worst nightmares graphics developers have faced since the dawn of graphics hardware. Some hardware vendors don’t follow the specifications strictly, so you could easily end up writing code that runs perfectly on your hardware but fails on other machines. Rejecting code that breaks the standard is just as important as correctly running code that follows it.

Therefore, ALWAYS follow the specification, even if your code works just fine. The OpenGL ES specification explicitly states that implicit conversions are not supported, and regular OpenGL won’t mind if you write the types explicitly. Thus, for maximum compatibility, never depend on implicit conversions.
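As an illustration of the rule, here is a hypothetical shader (GLSL ES 1.00 assumed) where the commented-out line is the kind of implicit conversion the specification rejects. One subtlety worth knowing: constructors such as vec4(...) are specified to convert their arguments, so vec4(0, 1, 0, 1) is actually legal; it’s ordinary assignments and expressions (like 1.0 + 1) that get no implicit conversion.

```glsl
precision mediump float;

void main(void) {
    // float green = 1;   // compile error in GLSL ES: an int literal is not implicitly converted to float
    float green = 1.0;    // OK: a float literal
    gl_FragColor = vec4(0.0, green, 0.0, 1.0);
}
```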

To convert from one type to another we use conversion constructors, like:

gl_FragColor = vec4(float(0), float(1), float(0), float(1));

But since there’s absolutely no point in doing this here, we’ll just get back to our original code,

gl_FragColor = vec4(0.0, 1.0, 0.0, 1.0);

The second thing to notice is the “vec4” part. Hardware designers know that graphics programs make extensive use of vectors and matrices. Let’s say I want to add the positions of two points in 3D space. Normally this would take 3 additions: one for the x-axis, one for the y-axis and one for the z-axis. That means using the addition circuit 3 times in a row, which takes 3 times as long as a single addition. Instead, graphics hardware often provides 3 addition circuits, so the whole vector operation can be done in one go.
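A sketch of the same idea in GLSL (ES 1.00 assumed): the single a + b works on all three components at once, while the second version spells out the three scalar additions. Whether the first really compiles to a single hardware instruction depends on the GPU and its compiler.

```glsl
precision mediump float;

void main(void) {
    vec3 a = vec3(0.25, 0.5, 0.0);
    vec3 b = vec3(0.25, 0.25, 0.5);

    // One vector addition: x, y and z are added by a single operator.
    vec3 sum = a + b;                 // (0.5, 0.75, 0.5)

    // The same result spelled out as three scalar additions.
    vec3 sumByComponent;
    sumByComponent.x = a.x + b.x;
    sumByComponent.y = a.y + b.y;
    sumByComponent.z = a.z + b.z;

    // Both vectors hold the same value; output one of them as a color.
    gl_FragColor = vec4(sum, 1.0);
}
```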

Today, a single graphics chip can have hundreds or even thousands of cores with dedicated ALUs (Arithmetic Logic Units) to handle many pixels at once. Rather than shading pixel by pixel, the hardware runs your code many times in parallel without you noticing.

To sum this up, good:

gl_FragColor = vec4(0.0, 1.0, 0.0, 1.0);

bad:

gl_FragColor.r = 0.0;
gl_FragColor.g = 1.0;
gl_FragColor.b = 0.0;
gl_FragColor.a = 1.0;

fast:

gl_FragColor = vec4(0.0, 1.0, 0.0, 1.0);

slow:

gl_FragColor.r = 0.0;
gl_FragColor.g = 1.0;
gl_FragColor.b = 0.0;
gl_FragColor.a = 1.0;

You get the point! Not all graphics hardware has vector instructions, though; many GPUs process each component with scalar ALUs and get their speed from shading many fragments in parallel instead. On such hardware these two forms are equivalent, so just do it the vector way to be on the safe side.

Now back to the code (this is the last time, I promise),

gl_FragColor = vec4(0.0, 1.0, 0.0, 1.0);

The “vec4” part is a vector constructor. It builds a floating-point vector from the given parameters. You don’t have to worry about managing the generated vector; think of it as a temporary variable or a register. A good shader compiler might assign the values to the left-hand side directly without using a temporary vector at all.
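A few other forms of the vector constructor, sketched in GLSL ES 1.00; the variable names are arbitrary. A single scalar is copied into every component, and smaller vectors or swizzles such as a.xy can be combined with scalars or other swizzles to fill the rest.

```glsl
precision mediump float;

void main(void) {
    vec4 a = vec4(0.0, 1.0, 0.0, 1.0);   // four scalars
    vec4 b = vec4(0.5);                   // one scalar fills all four: (0.5, 0.5, 0.5, 0.5)
    vec3 rgb = vec3(0.0, 1.0, 0.0);
    vec4 c = vec4(rgb, 1.0);              // a vec3 plus a float: (0.0, 1.0, 0.0, 1.0)
    vec4 d = vec4(a.xy, b.zw);            // pieces of other vectors: (0.0, 1.0, 0.5, 0.5)
    gl_FragColor = c;                     // the same green as our first shader
}
```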

Whether the compiler can skip that temporary may also depend on whether you set the “gl_FragColor” output once or multiple times throughout your shader, and this varies from hardware to hardware. If you assign it a value in several different places, a temporary may be created and used in place of the actual “gl_FragColor”, then copied to the actual “gl_FragColor” once at the end of the program.
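A sketch of doing that bookkeeping by hand (GLSL ES 1.00 assumed): compute the color in an ordinary local variable and write gl_FragColor exactly once at the end. Many compilers will produce the same code either way, so treat this as a habit rather than a guaranteed speedup; the 100-pixel threshold is just an arbitrary example.

```glsl
precision mediump float;

void main(void) {
    // Build the color in an ordinary local variable...
    vec4 color = vec4(0.0, 1.0, 0.0, 1.0);   // green by default
    if (gl_FragCoord.x < 100.0) {
        color = vec4(1.0, 0.0, 0.0, 1.0);    // red for the leftmost 100 pixel columns
    }
    // ...and write the built-in output exactly once, at the very end.
    gl_FragColor = color;
}
```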

Shader compilers are not necessarily the best compilers in existence. Bugs, issues and poorly optimized code are not uncommon. They have to be both good and fast, since shader code is typically compiled at run time: until the shader actually runs, there’s no knowing what type of hardware is going to run it, so shader code is shipped with the games/apps that use it and compiled on the fly.

That’s why manual optimizations can pay off! In simple shaders on weak hardware, saving a single temporary could noticeably affect the frame rate. Every operation counts.

This concludes our simple shader. Go ahead and try changing the color. Draw something... red... or blue... or reddish blue 😀 I hope this made you more familiar with shaders. In the following tutorials (if Allah wills) we’ll be doing more than just coloring the entire screen, one tiny step at a time. Thank you for reading to the end 🙂 Join our Facebook group to get more updates and to discuss shaders.
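For instance, one possible “reddish blue”, with arbitrary values: full blue with half-intensity red mixed in. Like our first shader, it declares no float variables, so no precision statement is needed.

```glsl
void main(void) {
    // Red = 0.5, green = 0.0, blue = 1.0, alpha = 1.0: a blue with some red mixed in.
    gl_FragColor = vec4(0.5, 0.0, 1.0, 1.0);
}
```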

References

- https://www.khronos.org/registry/gles/specs/3.0/GLSL_ES_Specification_3.00.3.pdf

- https://www.khronos.org/registry/webgl/specs/latest/

- https://www.opengl.org/wiki/Core_Language_(GLSL)

- https://www.opengl.org/wiki/Fragment_Shader

- https://www.opengl.org/wiki/Built-in_Variable_(GLSL)

- https://www.opengl.org/wiki/Data_Type_(GLSL)

- https://en.wikibooks.org/wiki/OpenGL_Programming/Shaders_reference